TLDR: include() can be significantly faster than file_get_contents(), if certain conditions are met.

I’m building a simple page caching plugin to ship with Sail CLI for WordPress. I’ve already decided that the filesystem is going to be the primary storage method, having run some benchmarks against Redis and Memcached with satisfying results.

However, even with just the filesystem, there are quite a few options to consider. One of them is whether PHP’s include() might be a better alternative to the usual file_get_contents(), readfile() or fpassthru() functions.

I provisioned a new 4-core 8GB server on DigitalOcean using Sail CLI. I wanted to make sure my benchmark test data fit into memory. The tested PHP version was 7.4.3 with Zend Engine v3.4.0 and Opcache v7.4.3.

The Benchmark

I wrote a create.php script that generates simulated cache files: strings of HTML. I made sure every file is unique too, just to rule out any cheeky optimizations on either side (some tests also used str_shuffle()).

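$rounds = 10000;

$content = file_get_contents( 'https://konstantin.blog' );

// Note: addslashes() does not escape "$", so a sequence like $foo in the HTML
// would be interpolated as a variable inside this double-quoted string.
// var_export( $content, true ) is a safer way to build the PHP literal.
$content_include = '<?php $data = "' . addslashes( $content ) . '";';

// Assumes the cache/raw and cache/include directories already exist.
for ( $i = 0; $i < $rounds; $i++ ) {
    // A unique trailing comment keeps every file distinct.
    file_put_contents( "cache/raw/item.{$i}.raw", $content . "\n<!-- {$i} -->" );
    file_put_contents( "cache/include/item.{$i}.php", $content_include . "\n// {$i}" );
}

I ran create.php on my server and made sure all the files were in place: exactly 20,000 of them. I then created benchmark.php, which simply loads all these files in a loop: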

$rounds = 10000;

// Read the raw HTML into a string (the result is discarded).
function test_file_get_contents( $i ) {
    file_get_contents( "cache/raw/item.{$i}.raw" );
}

// Execute the PHP file, which assigns the HTML to a local $data variable.
function test_include( $i ) {
    include( "cache/include/item.{$i}.php" );
}

for ( $i = 0; $i < $rounds; $i++ ) {
    test_file_get_contents( $i );
    test_include( $i );
}

Default Settings

Before running benchmark.php, I made sure that both the .raw and the .php files were all in the kernel page cache. That eliminates disk I/O, so in both cases the files are read directly from memory:

$ fincore raw/* | wc -l

10001

$ fincore include/* | wc -l

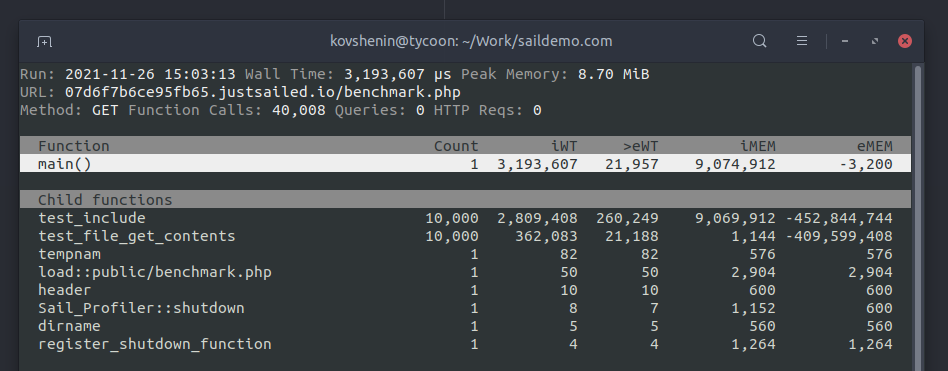

10001The first run wasn’t great for include().

This first run showed include() to be over 7 times slower than file_get_contents(). Honestly, I expected it to be slower, but not by this much.

Whereas file_get_contents() simply reads the file from disk (or, in this case, from the kernel’s page cache), include() on a fresh run does exactly the same read, but then also has to parse the file as PHP code and compile it. That apparently takes quite some time.
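The post doesn’t show how the timings were collected. To see that extra parse-and-compile cost for yourself, a minimal sketch is a small timing helper like the hypothetical time_loop() below, assuming PHP 7.3+ for hrtime() and the cache/raw and cache/include layout from create.php. Unlike benchmark.php, it times each function in its own loop instead of interleaving them.

```php
<?php
/**
 * Calls $fn( $i ) for $i = 0 .. $rounds - 1 and returns the elapsed
 * wall-clock time in seconds, using the monotonic hrtime() clock (PHP 7.3+).
 */
function time_loop( callable $fn, int $rounds ): float {
    $start = hrtime( true ); // nanoseconds as an integer
    for ( $i = 0; $i < $rounds; $i++ ) {
        $fn( $i );
    }
    return ( hrtime( true ) - $start ) / 1e9;
}

// Only run the comparison if the files generated by create.php are present.
if ( is_dir( 'cache/raw' ) && is_dir( 'cache/include' ) ) {
    $rounds = 10000;

    $fgc = time_loop( function ( $i ) {
        file_get_contents( "cache/raw/item.{$i}.raw" );
    }, $rounds );

    $inc = time_loop( function ( $i ) {
        include "cache/include/item.{$i}.php";
    }, $rounds );

    printf( "file_get_contents(): %.3f s\n", $fgc );
    printf( "include():           %.3f s (%.1fx)\n", $inc, $inc / $fgc );
}
```

Running it twice in a row from a SAPI with Opcache enabled should show the difference between a cold run (compile everything) and a warm one (opcodes already in shared memory).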

However, once it has done so, the compiled opcodes are stored in a special opcode cache in shared memory by the Zend Opcache extension, which is pretty standard across most hosts nowadays. So the next time the same file is requested, it doesn’t need to be parsed or compiled again: the result is already in memory.
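One way to observe this is to ask opcache_is_script_cached() before and after an include(), as in the sketch below (opcache_state_around_include() is a hypothetical helper). One assumption worth stating: Opcache skips files modified within the last opcache.file_update_protection seconds (2 by default), so the sketch backdates the freshly written file with touch(). From the CLI you’ll also need opcache.enable_cli=1, otherwise both checks will typically report false.

```php
<?php
/**
 * Includes $file and reports whether Opcache held a compiled copy of it
 * just before and just after. Both values are null if the Opcache
 * extension isn't loaded at all.
 */
function opcache_state_around_include( string $file ): array {
    $can_check = function_exists( 'opcache_is_script_cached' );
    $before    = $can_check ? opcache_is_script_cached( $file ) : null;
    include $file; // read, then parse and compile unless a cached copy exists
    $after     = $can_check ? opcache_is_script_cached( $file ) : null;
    return array( 'before' => $before, 'after' => $after );
}

// A throwaway file holding a small HTML string, similar to the cache files.
$demo = sys_get_temp_dir() . '/opcache-demo-' . getmypid() . '.php';
file_put_contents( $demo, '<?php $data = ' . var_export( '<p>Hello</p>', true ) . ';' );
// Backdate the file so opcache.file_update_protection doesn't skip it.
touch( $demo, time() - 60 );

var_dump( opcache_state_around_include( $demo ) );
unlink( $demo );
```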

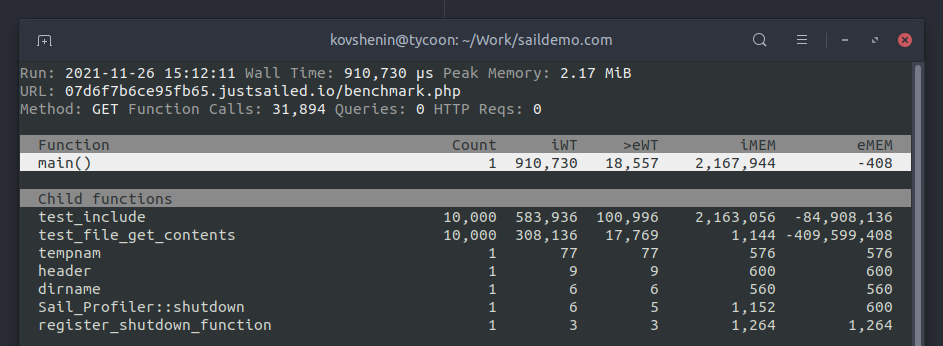

We can see this from the second run:

Much better this time, however still slower than file_get_contents(). This didn’t look too great so I started playing around with the Opcache configuration.

Increased memory consumption and max accelerated files

I added an opcache_is_script_cached() call to my loop and counted the total number of cached scripts, expecting 10,000. Unfortunately, that’s not what I got. During the run above, only about 8,700 files were cached by Zend Opcache, which means the remaining 1,300 still had to be read from disk (well, memory), parsed, compiled, and so on.
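The post doesn’t include the counting code itself. A minimal version might look like the sketch below. count_cached_includes() is a hypothetical helper, and unlike the original it runs after the benchmark loop rather than inside it. It has to run in the same SAPI (and, for the CLI, the same process) that did the includes, because each SAPI has its own Opcache shared memory.

```php
<?php
/**
 * Counts how many of the generated include files Opcache currently holds.
 * $dir and $rounds mirror the layout produced by create.php.
 */
function count_cached_includes( string $dir, int $rounds ): int {
    if ( ! function_exists( 'opcache_is_script_cached' ) ) {
        return 0; // Opcache isn't loaded, so nothing can be cached.
    }
    $cached = 0;
    for ( $i = 0; $i < $rounds; $i++ ) {
        if ( opcache_is_script_cached( "{$dir}/item.{$i}.php" ) ) {
            $cached++;
        }
    }
    return $cached;
}

$rounds = 10000;
printf(
    "%d of %d scripts cached\n",
    count_cached_includes( __DIR__ . '/cache/include', $rounds ),
    $rounds
);
```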

So I dug through the Opcache runtime configuration settings and found a few relevant limits I might have been hitting.

opcache.memory_consumption defaults to 128 megabytes. The .php files totalled closer to 400 MB, and while they might be compacted somewhat before being stored in Opcache, probably not down to 128 MB. I increased this limit to 512 megabytes.

The second option I spotted was opcache.max_accelerated_files, which defaults to exactly 10,000 files. A default WordPress installation ships with about 1,000 PHP files, many of which were likely in Opcache as well. I increased this limit to 50,000 files.
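To check how close a server is to these two limits, a sketch like the one below reads the configured values with ini_get() and compares them against live usage from opcache_get_status(). opcache_limits_report() is a hypothetical helper. Keep in mind that opcache_get_status() only describes the shared memory of the SAPI it runs in, so calling it from the CLI won’t tell you anything about PHP-FPM’s cache.

```php
<?php
/**
 * Reports the memory and file limits next to live usage, or null when
 * Opcache isn't loaded or isn't enabled for this SAPI (e.g. the CLI
 * without opcache.enable_cli=1).
 */
function opcache_limits_report(): ?array {
    if ( ! function_exists( 'opcache_get_status' ) ) {
        return null;
    }
    $status = opcache_get_status( false ); // false: omit the per-script list
    if ( ! is_array( $status ) || ! isset( $status['memory_usage'], $status['opcache_statistics'] ) ) {
        return null;
    }
    $memory = $status['memory_usage'];
    $stats  = $status['opcache_statistics'];
    return array(
        // Configured shared memory size, in megabytes.
        'memory_consumption_mb' => (int) ini_get( 'opcache.memory_consumption' ),
        'used_memory_mb'        => round( $memory['used_memory'] / 1048576, 1 ),
        'free_memory_mb'        => round( $memory['free_memory'] / 1048576, 1 ),
        // Configured value; Opcache rounds it up to an internal prime,
        // which is what max_cached_keys reports.
        'max_accelerated_files' => (int) ini_get( 'opcache.max_accelerated_files' ),
        'max_cached_keys'       => $stats['max_cached_keys'],
        'num_cached_scripts'    => $stats['num_cached_scripts'],
        'cache_full'            => $status['cache_full'] ?? null,
    );
}

print_r( opcache_limits_report() );
```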

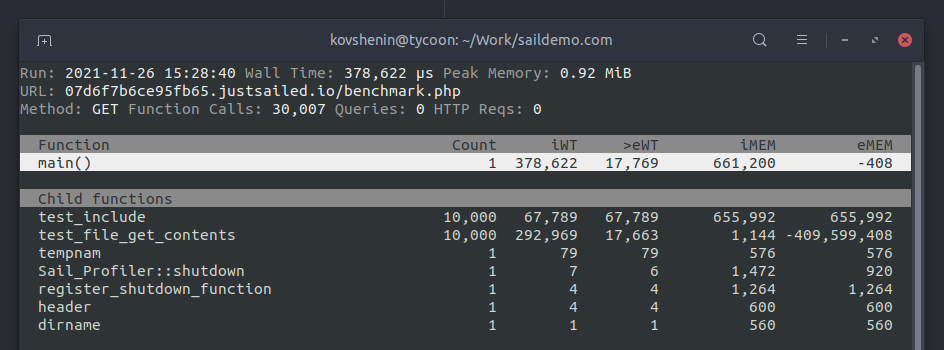

The results for include() looked so much better this time:

In this test, include() was about 4x faster than file_get_contents(). This is really good progress, but introduces a few things to keep in mind.

Replacement and Invalidation

The default Opcache limits are fairly sane for most use cases. However, if you hit them, include() can get you into trouble: Opcache stops adding new entries, so further include() calls are re-parsed and re-compiled every time, which means very poor performance.

Opcache has no replacement strategy, neither FIFO nor LRU. When it’s full, it’s full: it simply stops storing new entries and eventually flushes the entire cache. You could manually opcache_invalidate() individual files if needed, but note that invalidating a file in Opcache does not free its memory.

Instead, it marks the invalidated entry as “waste”, and when the same file is compiled again, it’s stored in a different free area of the shared memory segment. Once Opcache runs out of free memory and enough space has been wasted (opcache.max_wasted_percentage, 5% by default), it simply schedules a restart.
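To watch this happen, a sketch like the one below invalidates a single file and compares wasted_memory from opcache_get_status() before and after. invalidate_and_measure_waste() is a hypothetical helper, and the path is assumed to be one of the files generated by create.php. If the behaviour described above holds, wasted memory should grow by roughly the size of the invalidated entry instead of being returned to the free pool.

```php
<?php
/**
 * Invalidates one script and returns Opcache's wasted-memory figures before
 * and after, plus the configured opcache.max_wasted_percentage.
 * Returns null when Opcache isn't loaded or enabled for this SAPI.
 */
function invalidate_and_measure_waste( string $file ): ?array {
    if ( ! function_exists( 'opcache_invalidate' ) ) {
        return null;
    }
    $before = opcache_get_status( false );
    if ( ! is_array( $before ) || ! isset( $before['memory_usage'] ) ) {
        return null;
    }
    // true = force: invalidate even if the file's timestamp hasn't changed.
    $invalidated = opcache_invalidate( $file, true );
    $after       = opcache_get_status( false );

    return array(
        'invalidated'           => $invalidated,
        'wasted_before'         => $before['memory_usage']['wasted_memory'],
        'wasted_after'          => $after['memory_usage']['wasted_memory'],
        'wasted_percentage'     => $after['memory_usage']['current_wasted_percentage'],
        'max_wasted_percentage' => (float) ini_get( 'opcache.max_wasted_percentage' ),
    );
}

// Hypothetical path: one of the cache files generated by create.php.
var_dump( invalidate_and_measure_waste( __DIR__ . '/cache/include/item.0.php' ) );
```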

Another thing to keep in mind is that your main application (WordPress, in our case) shares the same Opcache. A bare WordPress install is about 1,000 PHP files, and large plugins can add quite a few more: Automattic’s Jetpack alone is close to 900 PHP files. It’s possible to hit the default 10,000-file limit with just WordPress core and a few bulky themes or plugins.
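To get a feel for how many files a given install could contribute, a rough count of .php files on disk works as an upper bound, since Opcache only stores the files that actually get included. The count_php_files() helper and the /path/to/wordpress path below are hypothetical.

```php
<?php
/**
 * Counts *.php files under $root. This is an upper bound on what an install
 * can put into Opcache, since only files that are actually included get cached.
 */
function count_php_files( string $root ): int {
    $files = new RecursiveIteratorIterator(
        new RecursiveDirectoryIterator( $root, FilesystemIterator::SKIP_DOTS )
    );
    $count = 0;
    foreach ( $files as $file ) {
        if ( $file->isFile() && strtolower( $file->getExtension() ) === 'php' ) {
            $count++;
        }
    }
    return $count;
}

// Hypothetical path to a WordPress install.
$wp_root = '/path/to/wordpress';
if ( is_dir( $wp_root ) ) {
    printf( "%d PHP files under %s\n", count_php_files( $wp_root ), $wp_root );
    printf( "opcache.max_accelerated_files = %s\n", ini_get( 'opcache.max_accelerated_files' ) );
}
```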

Not a great fit for page caching

While include() can sometimes be significantly faster than file_get_contents(), overall I don’t think it’s a great fit for page caching.

The 7x penalty of having to re-parse and re-compile the entire PHP file is way too much of a risk, and in a page caching plugin we can’t really guarantee that a file will be present in Opcache. Nor do we have control over the environment, and asking users (or their hosts) to fiddle with their Opcache configuration is not going to be a great user experience.

The risk of a full Opcache flush is also quite high, and a flush hurts performance not just for page caching but for the entire PHP application.

The potential gains, however, should not be neglected. Even with the files in the Linux kernel’s page cache, there’s still some overhead, which could likely be mitigated by using shared memory. If you’d like some further reading about PHP’s Opcache, here’s a great post by Julien Pauli.

If you’d like to follow my progress on the page caching plugin I’m building, as well as other WordPress related tips, benchmarks and experiments, consider subscribing!