I read articles about web performance and scaling almost every day, and when it comes to caching, the vast majority of them promote tools like Redis and Memcached, which are really fast, in-memory key-value stores.

Their performance metrics, the requests per second, how easy it is to scale them and all their great features, will often overshadow the fact that these are services designed to run on remote servers.

In a single-server setup they will very likely hurt your performance compared to simpler tools like reading from and writing to the local filesystem, provided there is enough RAM for the Linux kernel page cache, of course. RAM is still faster than SSD or NVMe; nothing has changed about that.
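
To make "reading from and writing to the local filesystem" concrete, here is a minimal sketch of a file-backed key-value helper. The function names (`file_cache_path`, `file_cache_set`, `file_cache_get`) and the `.cache` suffix are made up for illustration; this is not the code used in the benchmark below and not any plugin's actual implementation.

```php
<?php
// Hypothetical helpers for illustration only.

/** Map a cache key to a file path inside $dir. */
function file_cache_path( string $dir, string $key ): string {
    // Hash the key so arbitrary strings become safe file names.
    return rtrim( $dir, '/' ) . '/' . md5( $key ) . '.cache';
}

/** Store a string value; returns true on success. */
function file_cache_set( string $dir, string $key, string $value ): bool {
    $path = file_cache_path( $dir, $key );
    $tmp  = $path . '.' . uniqid( '', true ) . '.tmp';

    // Write to a temporary file first, then rename it into place,
    // so readers never see a half-written entry.
    if ( file_put_contents( $tmp, $value ) === false ) {
        return false;
    }

    return rename( $tmp, $path );
}

/** Fetch a value, or null when the key is not in the cache. */
function file_cache_get( string $dir, string $key ): ?string {
    $path = file_cache_path( $dir, $key );

    if ( ! is_file( $path ) ) {
        return null;
    }

    $value = file_get_contents( $path );

    return $value === false ? null : $value;
}

$dir = sys_get_temp_dir();
file_cache_set( $dir, 'foo', 'hello world' );
echo file_cache_get( $dir, 'foo' ), PHP_EOL; // prints "hello world"
```

Repeated reads of the same file are typically served from the kernel page cache, which is the effect the rest of this post measures.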

The Benchmark

I used Sail to provision a single-core Intel CPU server with 1 GB of RAM on DigitalOcean. I installed a Redis server (5.0.7, jemalloc-5.2.1) and a Memcached server (1.5.22) locally side-by-side and made sure they were both running.

I wrote a simple mu-plugin, which on init writes some data to a Redis key, a Memcached key and a file on disk:

$redis = new Redis;

$redis->connect('127.0.0.1', 6379);

$redis->set( 'foo', 'hello world' );

$memcached = new Memcached;

$memcached->addServer( '127.0.0.1', 11211 );

$memcached->set( 'foo', 'hello world' );

file_put_contents( '/tmp/foo.txt', 'hello world' );

I also made sure the /tmp directory was actually part of the filesystem on disk and not an in-memory tmpfs. I then ran some gets in a loop:

for ( $i = 0; $i < 10000; $i++ ) {

$r = $redis->get( 'foo' );

$m = $memcached->get( 'foo' );

$f = file_get_contents( '/tmp/foo.txt' );

}

And finally closed the Redis and Memcached connections, and terminated the PHP script:

$redis->close();

$memcached->quit();

exit;

I used the Sail profiler to run this code through Xhprof a few times. The results didn’t vary too much, here’s an average run:

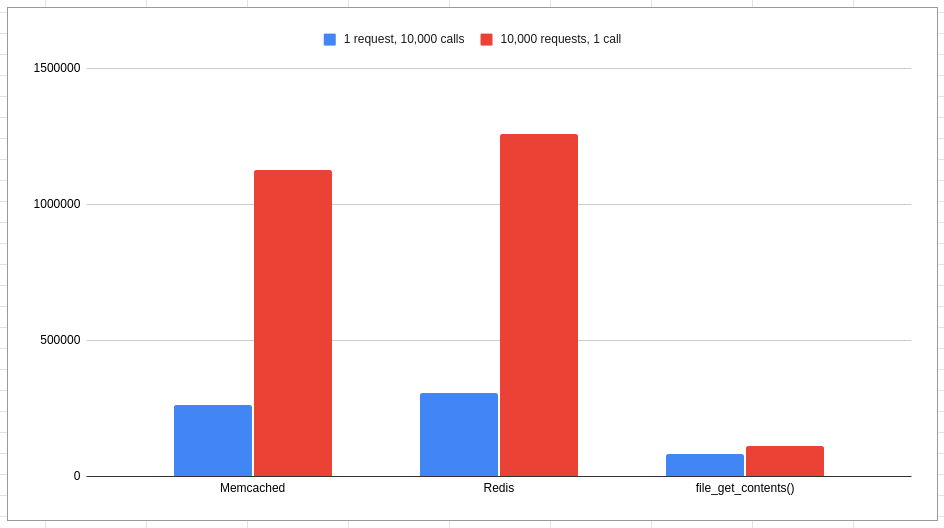

Memcached was slightly faster than Redis (263 ms vs 305 ms), but they’re both way slower than PHP’s file_get_contents(), which clocked in at only 81 ms, so about 3x faster than Memcached or Redis.
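
The check that /tmp is on disk rather than on tmpfs matters here, because a tmpfs read never touches the disk-backed filesystem path at all. One way to do such a check from PHP on Linux is to look up the mount that holds the path in /proc/mounts. This is only a sketch: the function name `fs_type_for_path` is made up, it may not be how the check was done for this benchmark, and it ignores escaped characters (such as `\040` for spaces) in mount points.

```php
<?php
/**
 * Return the filesystem type of the mount that holds $path, given the
 * contents of /proc/mounts, or null if no mount point matches.
 */
function fs_type_for_path( string $path, string $mounts ): ?string {
    $best_len  = -1;
    $best_type = null;

    foreach ( explode( "\n", trim( $mounts ) ) as $line ) {
        // Fields: device, mount point, filesystem type, options, dump, pass.
        $fields = preg_split( '/\s+/', trim( $line ) );

        if ( count( $fields ) < 3 ) {
            continue;
        }

        $mount  = $fields[1];
        $prefix = rtrim( $mount, '/' ) . '/';

        // The path belongs to this mount if it sits at or below the mount point.
        if ( strpos( $path . '/', $prefix ) !== 0 ) {
            continue;
        }

        // Prefer the longest mount point; on ties, later entries win.
        if ( strlen( $mount ) >= $best_len ) {
            $best_len  = strlen( $mount );
            $best_type = $fields[2];
        }
    }

    return $best_type;
}

$mounts = is_readable( '/proc/mounts' ) ? (string) file_get_contents( '/proc/mounts' ) : '';
echo fs_type_for_path( '/tmp/foo.txt', $mounts ) ?? 'unknown', PHP_EOL;
```

A result of `tmpfs` would mean the file lives in memory; something like `ext4` or `xfs` means it is on a disk-backed filesystem.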

More requests = more obvious

In a page caching plugin context, you’re more likely to run 10,000 requests with a single or a couple of cache gets, rather than a single request with 10,000 gets, which means that Redis and Memcached will also pay the penalty of connecting to their corresponding servers and closing the connections, on every single request.

I altered the test to simulate that by creating a new Redis object and a new Memcached object inside the loop, and closing the connections inside the loop as well. It now looked like this:

for ( $i = 0; $i < 10000; $i++ ) {

$redis = new Redis;

$redis->connect( '127.0.0.1', 6379 );

$r = $redis->get( 'foo' );

$redis->close();

$memcached = new Memcached;

$memcached->addServer( '127.0.0.1', 11211 );

$m = $memcached->get( 'foo' );

$memcached->quit();

$f = file_get_contents( '/tmp/foo.txt' );

}

The results were in line with what was expected:

It's worth noting that the Memcached::addServer() method, unlike its Redis counterpart, doesn’t actually establish a connection. The next ::get() call does, which is why it takes longer than in the previous test.

- Memcached addServer() + get() + quit(): 1.127 seconds

- Redis connect() + get() + close(): 1.258 seconds

- file_get_contents(): 0.110 seconds
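
The numbers above come from Xhprof. If a profiler is not available, a rough wall-clock measurement is possible with a small harness like the one below. This is a sketch under assumptions: `time_calls` is a made-up helper, `hrtime()` requires PHP 7.3 or newer, and wall-clock timing is not equivalent to Xhprof's per-function accounting, so the figures will not match exactly.

```php
<?php
/** Run $fn $iterations times and return the elapsed wall time in milliseconds. */
function time_calls( callable $fn, int $iterations ): float {
    $start = hrtime( true ); // Monotonic clock, in nanoseconds.

    for ( $i = 0; $i < $iterations; $i++ ) {
        $fn();
    }

    return ( hrtime( true ) - $start ) / 1e6;
}

// Use a throwaway file so the sketch does not depend on /tmp/foo.txt existing.
$file = tempnam( sys_get_temp_dir(), 'bench' );
file_put_contents( $file, 'hello world' );

$ms = time_calls( function () use ( $file ) {
    file_get_contents( $file );
}, 10000 );

printf( "file_get_contents() x 10000: %.1f ms\n", $ms );

unlink( $file );
```

The Redis and Memcached variants can be timed the same way by passing a closure that connects, gets and closes, provided the corresponding PHP extensions and servers are available.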

And just to verify I wasn’t hitting any internal PHP cache in file_get_contents(), I used strace to trace all the system calls in the running php-fpm child into a file. It did, in fact, access the filesystem over 10,000 times using the openat() system call:

$ pgrep php-fpm -u www-data

113806

$ strace -p 113806 -o trace.txt

$ cat trace.txt | grep openat | wc -l

10071

# cat trace.txt | grep openat | tail

openat(AT_FDCWD, "/tmp/foo.txt", O_RDONLY) = 6

openat(AT_FDCWD, "/tmp/foo.txt", O_RDONLY) = 6

openat(AT_FDCWD, "/tmp/foo.txt", O_RDONLY) = 6

...

So with this test, using the filesystem is 10x faster than Redis or Memcached. Obviously we need a chart for this, in microseconds, lower is better:
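
The per-iteration figures can be derived from the totals reported earlier. The sketch below does that arithmetic, reading the totals as 1.127 s, 1.258 s and 0.110 s over 10,000 iterations; the helper name `per_iteration_us` is made up for this example.

```php
<?php
/** Convert a total in seconds over $iterations into microseconds per iteration. */
function per_iteration_us( float $seconds, int $iterations ): float {
    return $seconds / $iterations * 1e6;
}

// Totals from the second test, in seconds, for 10,000 iterations.
$totals = [
    'Memcached addServer() + get() + quit()' => 1.127,
    'Redis connect() + get() + close()'      => 1.258,
    'file_get_contents()'                    => 0.110,
];

$iterations = 10000;
$baseline   = $totals['file_get_contents()'];

foreach ( $totals as $label => $seconds ) {
    printf(
        "%-40s %6.1f us per iteration, %4.1fx the filesystem\n",
        $label,
        per_iteration_us( $seconds, $iterations ),
        $seconds / $baseline
    );
}
```

That works out to roughly 113 µs for Memcached, 126 µs for Redis and 11 µs for the filesystem per iteration, which is where the "10x" comes from.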

In the real world however, it’s not all that obvious, and probably not that big of a deal. If your page caching plugin is using Redis or Memcached, there’s no need to rush to switch to the local filesystem. You’re very unlikely to witness a tenfold increase in performance.

Add Nginx and PHP-FPM

In my next test I took the code out of the loop, and split it into three files — memcached.php, redis.php and filesystem.php. As a baseline, I’ve also created an empty.php file which simply called exit. I then used Hey to run some requests.

The main difference between these tests and the previous ones is that previously we had metrics for just the function calls that we’re interested in. In the following tests, however, we’re adding a lot more things to the mix, including Nginx, PHP-FPM and Hey itself.

At 10,000 requests with no concurrency, these were the averages:

- empty.php: 3200 requests per second

- filesystem.php: 2900 requests per second

- memcached.php: 1900 requests per second

- redis.php: 1800 requests per second

I then added a concurrency level of 50. Here’s how things changed at 10,000 requests:

- empty.php: 2200 requests per second

- filesystem.php: 2100 requests per second

- memcached.php: 1400 requests per second

- redis.php: 1400 requests per second

In these tests file_get_contents() was only about 50% faster than Redis and Memcached, which is less than I expected, but still a win for the local filesystem.

Again, these numbers are affected by things like Nginx, SSL termination, the HTTP protocol, the FastCGI protocol, PHP-FPM spawning children, loading and parsing PHP files, working with Opcache, etc. So not a perfect comparison by any means, but much closer to what you could expect in the real world.
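
To put the throughput numbers in the same units as the earlier tests, requests per second can be converted to average time per request. The sketch below does this for the no-concurrency run only, where one request is in flight at a time, so the inverse of the throughput approximates the average end-to-end time per request. The inputs are the rounded averages listed above, so the output is approximate as well; `us_per_request` is a made-up helper name.

```php
<?php
/** Average time per request in microseconds, assuming one request at a time. */
function us_per_request( float $requests_per_second ): float {
    return 1e6 / $requests_per_second;
}

// Averages from the run with no concurrency.
$rps = [
    'empty.php'      => 3200,
    'filesystem.php' => 2900,
    'memcached.php'  => 1900,
    'redis.php'      => 1800,
];

$base = us_per_request( $rps['empty.php'] );

foreach ( $rps as $file => $value ) {
    $us = us_per_request( $value );
    printf( "%-15s %6.1f us per request, %6.1f us over empty.php\n", $file, $us, $us - $base );
}
```

By this estimate the file read adds roughly 32 µs on top of the empty script, while Memcached and Redis add roughly 214 µs and 243 µs. The gap is still there, but it is a much smaller share of a request that already costs about 312 µs before any cache lookup.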

Conclusion

Are Redis and Memcached over-hyped then? Not really, no. Both are great pieces of software when used correctly, and it turns out a single-server application is not the best environment for them to thrive.

But let’s face it, in the world of WordPress the majority of applications are single-server. And when struck with success, the easiest and most cost-efficient scaling path is always “up” (better hardware) rather than “out” (more servers).

I’m doing various benchmarks as part of building my own caching plugin for WordPress and Sail CLI. If you’re not familiar with Sail, it’s a free and open source CLI tool to provision, deploy and manage WordPress applications in the DigitalOcean cloud. Check it out on GitHub.
